The December 2020 Google Core Update

Once again, news publishers have been negatively affected by an algorithm update in Google search. Let's talk about these updates and what they mean for publishers.

This week I was going to write about optimising images for news articles, but then Google decided to roll out a massive algorithm update that seems to have had a profound impact on news websites.

So let’s talk about this update, and previous Google updates, and what they mean for news publishers.

The relationship between Google and news publishers is a layered and complex one. In a very tangible sense we can partially blame Google for the decline in revenues for publishers, but on the other hand the search engine is also responsible for featuring news prominently in its results (even when it doesn’t have to) and sends millions of visits to news websites every day.

In the first edition of this newsletter, I showed how Google uses news in its search ecosystem and explained that the Top Stories news box was where the magic happens for most publishers.

The Top Stories box is a search element, which means it’s governed by more or less the same algorithms that Google uses to rank its regular search results.

When a Google algorithm update rolls out, and the regular search results change (sometimes rather dramatically), we see similar effects in Top Stories. A publisher that loses ground in regular Google results will also see diminished visibility in Top Stories. So these algorithm updates have a clear and direct impact on the traffic that Google sends to publishers.

Newspocalypse

My first hands-on experience with Google’s fickle whims came in March 2018, with an algorithm update that I’ve started calling the ‘Newspocalypse’.

It was limited primarily to the UK media landscape, and affected a range of popular news websites like the Daily Mail, The Sun, The Express, and the Mirror:

This graph is from a tool called SISTRIX. It’s a great SEO platform which, among many other features, has a Visibility Index. This VI is based on daily and weekly monitoring of the top 100 ranked results in Google for over a million keywords. The SISTRIX Visibility Index gives a pretty good gauge of a website’s overall footprint in Google, and I often see strong correlations between these VI graphs and a website’s actual search traffic trends.

The graph shows a huge shift in visibility for these UK news publishers in March 2018. They all lost a lot of visibility in Google (and a lot of traffic as a result). Up to that point, UK news publishers had felt little impact from Google’s algorithm updates and could focus their SEO efforts on beating their competitors.

After March 2018, these publishers suddenly had to contend with another variable: Google’s opinions of their content.

In my analysis of this March 2018 update, the broader picture I managed to distil was one of topical focus. Essentially, prior to this update the UK’s major publishers had free rein to write about anything and everything, and they’d be able to achieve strong rankings in Google with articles on almost any conceivable topic.

After this update, that was no longer the case. Google had given these publishers a limited space to work in. Google allowed these sites to rank on a specific range of topics, but not beyond.

It was a ‘stay in your lane’ type update that limited the publishers’ scope for achieving visibility. After this update, if they wrote content that stuck to their strengths as publishers then yes, Google would rank their content. If, however, they wrote about topics that they weren’t experts in (according to Google), then that content would not rank and would not be shown in Top Stories.

It wasn’t the last time Google would unleash its wrath - several other updates have resulted in huge visibility changes for many of these publishers.

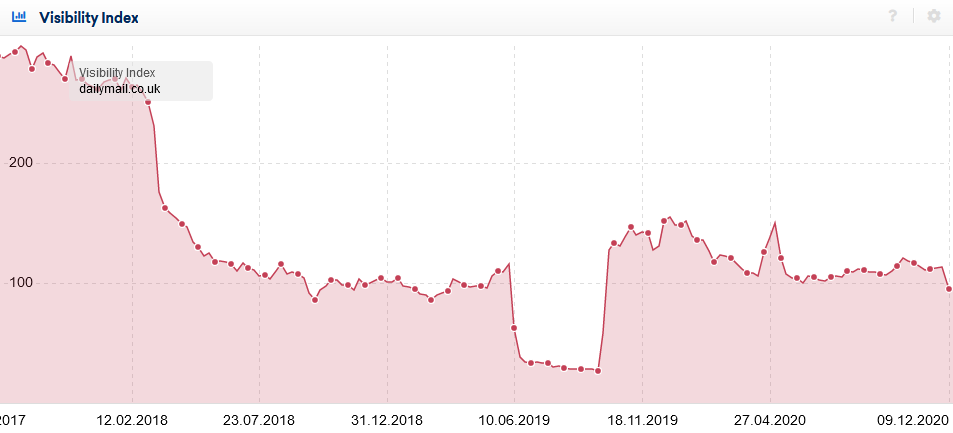

Take the Daily Mail, for example. It’s been on the receiving end of Google’s wrath several times - often negatively, sometimes positively:

The site never recovered from the March 2018 Google update, and was again decimated in the June 2019 algorithm update.

That update seems to have been ‘corrected’ in September 2019, when Google made another core algorithm change that reinstated the Daily Mail’s visibility to earlier levels (but still half as much as the pre-March 2018 peaks).

The latest update from this past week is once again having a negative impact on the Daily Mail site, with a strong loss of visibility. It’s not as severe as previous updates have been, but it’s still a meaningful loss.

Deserved?

For the Daily Mail site, it’s easy to come up with justifications for the severity of Google’s updates. The Mail has a specific approach to their content, which is part of their online brand identity and works well for keeping readers engaged with the site. It’s not a big leap to judge that editorial approach as ‘low quality’ and deserving to be punished by Google.

I don’t necessarily agree with that perspective, but I understand why others would come to such a simplistic conclusion. People could make the case that the Mail ‘deserved’ its losses.

It’s harder to maintain that stance when publishers like the New York Times, the BBC, and CNN.com are affected. Which is exactly what happened in this latest core update.

Data gathered from SISTRIX by the folks at Path Interactive shows that the nytimes.com, bbc.com, and cnn.com domains all saw significant losses in the visibility index. These sites have been affected by Google’s changing approaches to its rankings many times over the years - for better and worse.

As these are publishers of what almost everyone would consider ‘quality content’, you do have to wonder what Google is thinking when it decides to roll out an update that punishes these news sites.

When asked about these core updates and how sites could potentially ‘recover’ from them, Google’s representatives point to a blog post that tries to explain what Google is looking for in terms of content quality and expertise.

I won’t quote the blog in detail - you can read it for yourself here - but I do want to highlight some specific passages:

Does the content provide original information, reporting, research or analysis?

Does the content present information in a way that makes you want to trust it, such as clear sourcing, evidence of the expertise involved, background about the author or the site that publishes it, such as through links to an author page or a site’s About page?

If you researched the site producing the content, would you come away with an impression that it is well-trusted or widely-recognized as an authority on its topic?

Is this content written by an expert or enthusiast who demonstrably knows the topic well?

These are questions that Google suggests you ask yourself when reading content from websites - and if the answer is ‘no’, then it is possible the website can fall on the wrong side of a Google algorithm update.

Imagine sending that to someone at CNN or the New York Times as an ‘explanation’ of why their site has been punished by Google. I mean, seriously, think about it.

These are news publishers whose raison d’etre is to provide exactly the kind of content that Google purports to reward.

So when Googlers point people who query their core algorithm updates to this waffling documentation, it’s hard not to take offense. It’s a Pontius Pilate move, not intended to provide any real clarity or guidance. It’s Google’s ‘not my problem’ card.

It’s the Machines

And Google pulls this card for very good reason. Which is that, essentially, Google’s own engineers don’t really know why their search system does what it does.

For years Google has been working to integrate machine learning systems into its web search algorithms. These machine learning systems - you could call them Artificial Intelligence - are necessary because the web is too big and complex for human engineers to code algorithms for.

The web is too chaotic and too unpredictable for humans. It’s become impossible for human engineers, no matter how clever and motivated they are, to craft ranking algorithms that can account for such chaos.

Google realised fairly early on that, by utilising AI systems, they could move away from manually coding ranking algorithms that try to account for every possible variable. Instead, they would train up AI systems to recognise ‘quality’ (and the lack of it), and let the AI decide which sites should rank highly and which shouldn’t.

The core algorithm updates we’ve seen Google roll out these past few years have been, to a large extent, all about Google’s efforts to integrate these AI ranking systems into their search engine.

Each update is an improved version of previous AI systems, or the roll-out of a new AI system intended to improve a specific topical area of search such as medical or financial content.

If you have an hour to kill, Google released a video in October that gives a behind the scenes peek at the roll-out of an algorithm update. It’s interesting viewing:

I imagine Google intended that video as a bit of a PR exercise, showing the humanity behind the decisions they make about their ranking systems. And it certainly accomplishes that.

But it also shows how Google is increasingly handing over the reins to its AIs when it comes to deciding how websites rank in its results.

These algorithm updates are always heavily tested before they’re rolled out to the entire search engine. And these tests show certain win & loss percentages, based on evaluations of the ranking changes. If a proposed algorithm update meets a certain threshold of wins vs losses - i.e. it improves Google’s search results more than it worsens them - then it qualifies for roll-out.
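To make that wins-vs-losses idea a bit more concrete, here is a minimal sketch of what such a launch gate could look like. To be clear, this is purely illustrative: the `EvalResult` type, the `win_rate` and `qualifies_for_rollout` functions, and the 55% threshold are all hypothetical, and Google has not published how its actual evaluation works.

```python
from dataclasses import dataclass


@dataclass
class EvalResult:
    """Hypothetical tally from comparing old vs new rankings on test queries."""
    wins: int         # queries where the new ranking was judged better
    losses: int       # queries where the new ranking was judged worse
    neutral: int = 0  # queries with no meaningful difference


def win_rate(result: EvalResult) -> float:
    """Share of wins among the queries that changed for better or worse."""
    decided = result.wins + result.losses
    if decided == 0:
        return 0.0
    return result.wins / decided


def qualifies_for_rollout(result: EvalResult, threshold: float = 0.55) -> bool:
    """Assumed rule: launch only if the win rate meets the threshold."""
    return win_rate(result) >= threshold


if __name__ == "__main__":
    experiment = EvalResult(wins=60, losses=40, neutral=100)
    print(f"Win rate: {win_rate(experiment):.0%}")
    print(f"Qualifies: {qualifies_for_rollout(experiment)}")
```

Note what even this toy version implies: an update with a 60% win rate still made results worse for 40% of the queries that changed, and the sites behind those losses are the ones that feel it.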

A lot more goes into it, of course, much of which goes way over my head. But, in essence, Google increasingly relies on AI systems to improve their search results, where each core update is deemed to be a net improvement.

More importantly, due to the nature of machine learning systems, no human really understands how the AIs reach their conclusions. We can judge the quality of an AI’s output, but we don’t necessarily understand how the AI reached that point.

(As a sidenote, this increasing reliance on ‘black box algorithms’ goes way beyond Google. I highly recommend Frank Pasquale’s book on the topic.

It also makes Google’s recent firing of one of their prime AI ethicists even more worrying. But that’s a different topic entirely.)

Collateral Damage

Of course, a ‘net improvement’ in rankings means there are some deteriorations as well. While the overall impact of an algo update may be a net positive for Google’s results, it will inevitably cause some sites that may not ‘deserve’ it to be negatively impacted.

Collateral damage, basically. Google elevates some sites, and that means others lose out - even if they’re good sites themselves.

Ranking in search isn’t entirely zero-sum, but it’s not far off. When one site wins, others lose. And that’s exactly what happens with these algorithm updates.

Reading between the lines of Google’s attempts at communication about these core updates, that’s what they’re really trying to say. But they can’t come out and explicitly say “sorry you’re a loser on this one - better luck with the next one!” That’s too blunt and probably leaves them open to some form of litigation.

So they hide behind double-speak and hollow ‘great content’ mantras and hope we just shut up and get back to our many other worries.

Which most of us do, of course. We don’t have a choice. We can’t fight Google - they’re too big and too rich.

News publishers, on the other hand, have a bit more leverage. Politicians read the news, because the public reads the news. So it’s in Google’s interests to keep news publishers placated to a degree.

That’s how you get programmes like the Google News Initiative, spreading around some of the crumbs that fall from Google’s multi-billion dollar table. Since 2018, Google has given $168 million in funding to news organisations around the world through its News Initiative.

And there’s the recently announced News Showcase endeavour, where Google aims to hand out a billion dollars to publishers.

To give some context to those numbers: based on their 2019 revenue figures, it takes Google less than half a day to make $168 million and a bit over two days to make a clean billion.
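For anyone who wants to check that arithmetic, here is a rough sketch. The revenue figure is an assumption, since the text above doesn't state it: it uses Alphabet's reported 2019 revenue of roughly $161.9 billion, spread evenly over 365 days. The constant and function names are made up for illustration.

```python
# Assumption: Alphabet's reported 2019 revenue, roughly $161.9 billion.
ANNUAL_REVENUE_2019_USD = 161.9e9
DAYS_PER_YEAR = 365

# Average revenue per day, assuming it is earned evenly across the year.
DAILY_REVENUE_USD = ANNUAL_REVENUE_2019_USD / DAYS_PER_YEAR


def days_to_earn(amount_usd: float) -> float:
    """Days of average 2019 revenue needed to reach the given amount."""
    return amount_usd / DAILY_REVENUE_USD


if __name__ == "__main__":
    for label, amount in [("News Initiative ($168M)", 168e6),
                          ("News Showcase ($1B)", 1e9)]:
        days = days_to_earn(amount)
        print(f"{label}: {days:.2f} days ({days * 24:.1f} hours)")
```

Under those assumptions the $168 million works out to well under half a day of revenue, and the billion to a little over two days.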

But, hey, it’s decent money for publishers, and a cheap way for Google to keep publishers somewhat docile so they don’t sic the regulators on them.

How can you recover?

If your publishing site has been hit by a Google update, there could be some ways you can try to recover.

I’ve seen some examples of publishers culling ‘bad’ content (however that’s defined) and enforcing a tighter focus on specific topical areas where their editorial expertise was strongest. This resulted in positive gains in the next algorithm update.

And that’s always the crux: you have to wait for Google to tweak its AI systems again to have a shot at recovering from the damage done by the previous update. It’s very rare to recover your Google visibility through your own hard graft alone - most often, you need Google to change its mind about your website after you’ve ‘mended your ways’, so to speak.

If your site has been affected by this latest algorithm update, take comfort in the knowledge that you’re not alone. Your frustrations are shared by many.

And we’re not really in a position to do much about it. We all play Google’s game, and they can change the rules when it pleases them.

It’s a complex situation. On the one hand, I appreciate that as a private company Google is free to do whatever it damn well pleases with its software.

Yet on the other hand, we are talking about a behemoth that has a global market share of nearly 90%. Google is a monopoly, and that means it has to play by different rules.

As Uncle Ben said, with great power comes great responsibility. Google certainly has the power - whether they have the responsibility, well, the jury is still out on that one.

Miscellanea

There was some actually useful content published by Google recently, specifically two support documents that are especially worthwhile for news publishers:

This support document contains answers to a variety of questions people have asked about the upcoming Page Experience update Google intends to roll out in May 2021.

At some stage I’ll probably dedicate an entire newsletter to Core Web Vitals and Page Experience, as it’s a worthwhile topic to explore.

In this article, Google provides some guidance to large site owners about managing Googlebot’s crawl efforts and ensuring the right pages get crawled. Nothing too groundbreaking in there, but a good primer to share with the relevant tech folks in your organisation.

That’s it for this third edition of the newsletter. If you found this interesting, please share it with your colleagues and industry friends.

For more of my SEO-related ramblings and occasional (and often misguided) rugby commentary, you can follow me on Twitter: @badams

Thanks for reading, and until the next one!

Cheers,

Barry

Hi Barry, regarding this part:

Google had given these publishers a limited space to work in. Google allowed these sites to rank on a specific range of topics, but not beyond.

Is this statement your opinion and/or the result of analysis and studies in this regard, or does it derive from official statements by Google that have confirmed this nature of the update?

Hi Barry,

Suppose there is an algorithmic change to Discover or Top Stories, or Google pushes a Core Update. The impact on websites might differ, as we see with this December Core Update, but when Google Search rolls out such an update, is the same update rolled out to all websites indexed in SERP?

I am just trying to draw a parallel to what was done with GNews and understand whether what GNews did (impose quality checks on newish News websites while giving existing News websites a wildcard) is also done by GSearch, or whether the folks at GSearch have enough understanding and always push the algorithmic change to all websites indexed in SERP?

Basil