Google's AI Adventure - One Year On

A year after the introduction of AI Overviews, let's look at the impact on publishers' traffic and visibility and what's next in store for AI in search.

Earlier this week I gave a talk at the WAN-IFRA World News Media Congress about the current state of Google’s AI Overviews, the impact on traffic, and the broader evolution of search in other LLM chatbots (see my slides here). In this newsletter I will try to summarise the essence of my talk, as well as expand on some areas.

AI Overviews in 2025

Almost a year on from Google’s announcement of AI Overviews (previously in beta as the Search Generative Experience) we now have a pretty solid grasp of its impact on search and the wider web.

AI Overviews are now active in over 100 countries globally. For many informational queries, users will see an AI-generated snippet at the top of a Google search, with the option to expand the snippet for more detail.

A typical AI Overview will cite sources for the information it provides, with around 6 sources cited on average. The AI Overview provides anchor links for each of its claims, and each anchor reveals one or more links to webpages that purportedly contain the information the AI Overview is citing.

The percentage of Google searches that show an AI Overview is a point of contention. It appears to vary from industry to industry, with some being overwhelmed by AI Overviews. Data from BrightEdge shows that 76% of Google queries in the entertainment category will show an AI Overview.

Looking at search query data in aggregate, Sistrix says that in the UK the prevalence of AI Overviews is now around 18%, which is a sharp increase compared to the previous average of 4%.

That means that nearly one in five of all Google queries in the UK currently shows an AI Overview. That percentage is likely to rise further as Google’s confidence in AI Overviews increases. Other studies show similar percentages and strong growth of AIOs in numerous verticals.

The impact on traffic is meaningful. The latest data from Ahrefs indicates that a top ranked organic page will on average see a 34.5% loss of clickthrough rate if an AI Overview is shown above the organic results. This mirrors other studies, for example this one from Seer Interactive, that show similar declines in clickthrough rate when an AI Overview is shown.

Despite Google’s earlier claims, it’s now overwhelmingly clear that AI Overviews drive fewer clicks to webpages, even when a page is a cited source in the AI answer.

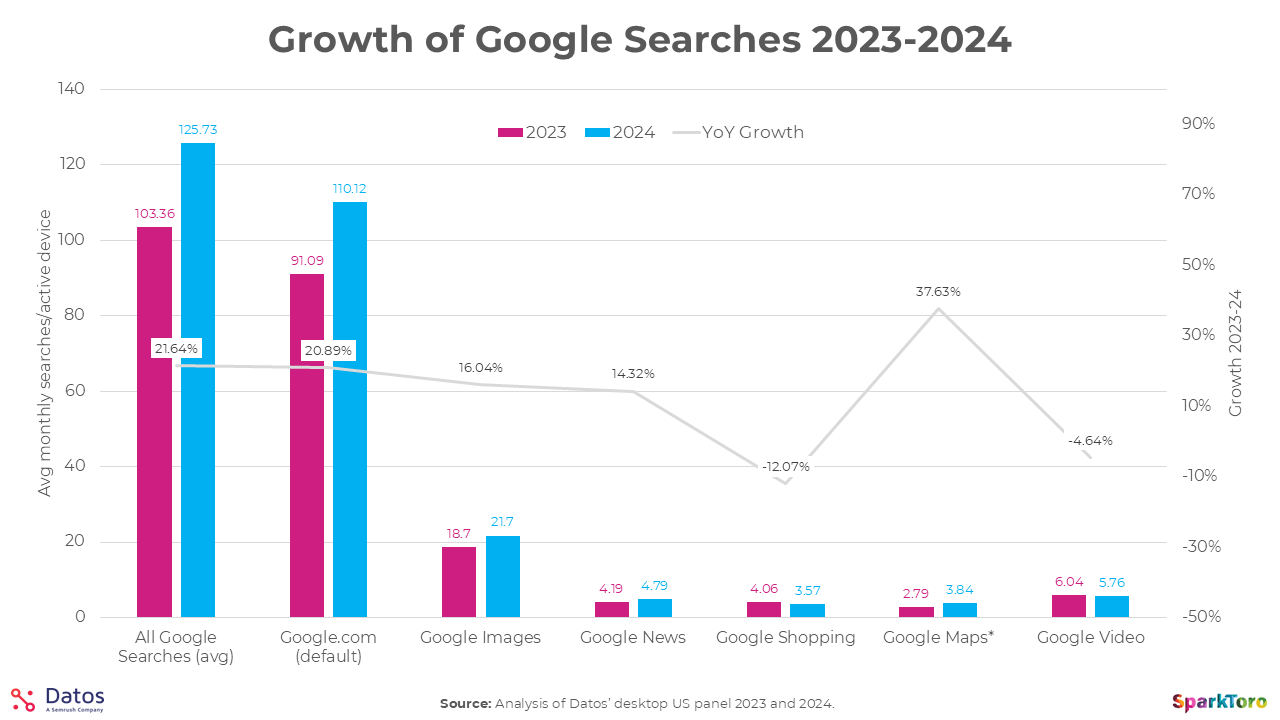

Nonetheless, there is some good news. The loss of clicks is at least partially mitigated by strong YoY growth in Google queries. Numbers collected by Datos and visualised by Sparktoro show that there was a 21% rise in Google queries in 2024 compared to 2023.
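To get a feel for how far query growth offsets the CTR loss, here is a back-of-the-envelope sketch that simply multiplies the two averages quoted above. It rests on loud assumptions: that the same query shows an AI Overview, that the page stays top-ranked, and that the 21% growth applies evenly to that query. Real traffic depends on query mix, rankings, and AIO prevalence, so treat the result as an illustration, not a forecast.

```python
# Back-of-the-envelope sketch, NOT a traffic model.
# Assumptions (hypothetical): the query shows an AI Overview, the page stays
# top-ranked, and the 21% YoY query growth applies evenly to this query.

CTR_LOSS_WITH_AIO = 0.345  # Ahrefs: average CTR loss for a top-ranked page under an AIO
QUERY_GROWTH_YOY = 0.21    # Datos/SparkToro: growth in Google queries, 2024 vs 2023


def relative_clicks(ctr_loss: float, query_growth: float) -> float:
    """Clicks relative to the baseline year, where 1.0 means no change."""
    return (1 + query_growth) * (1 - ctr_loss)


net = relative_clicks(CTR_LOSS_WITH_AIO, QUERY_GROWTH_YOY)
print(f"Clicks vs baseline: {net:.2f} ({(net - 1) * 100:+.1f}%)")
```

Under these assumptions the page ends up with roughly 21% fewer clicks than before: query growth softens the blow, but does not cancel it.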

The theory is that extensive usage of LLMs like ChatGPT and Copilot drives increased Google usage. The likely cause is that users want to verify generative AI output against actual trusted sources, and turn to Google to find those sources.

The second bit of good news is that news-focused publishers have less to fear from AI Overviews. With very few exceptions, a Google search that contains a Top Stories carousel will not have an AI Overview. Back in 2024, Google’s Liz Reid said that they “aim to not show AI Overviews for hard news topics, where freshness and factuality are important”. So far that has proven true.

Publishers that are heavily dependent on Top Stories news boxes on Google for their search traffic are not experiencing significant traffic losses… yet.

Optimising for AI Overviews

When engaging with AI Overviews, expanding the answers and clicking on the cited sources, you’ll quickly find commonalities among many of the webpages that Google will link to in its AIOs.

More often than not, these webpages will be structured in a certain way. The content will be divided - ‘chunked’ if you will - into concrete sections, each answering a specific aspect of the broader query.

For example, if it’s a webpage about toothache after an extraction, the page will have different sections on likely causes of toothache, how to manage the pain, when to seek medical advice, etc. Each of these sub-questions is highly relevant to the higher-level question about toothache, and each sub-question serves as the focus of its own section of content on the webpage.

When Google then looks for a webpage to support a claim made in an AI Overview, such a webpage is a logical source to cite.

If you want to increase the chances of your content being used as a cited source in Google’s AI Overviews, implementing such a structure on your informational pages is the primary tactic. Chunk your content into concrete sections, each with its own topical focus relating to the overarching topic you’re covering. Ensure every section has a strong subheading that signposts what the section is about.

Google’s AI Overviews use a retrieval-augmented generation (RAG) grounding process to decrease the likelihood of hallucinations. Structuring your content in a logical way, with distinct topical sections on your pages, helps Google understand that your webpage is a viable candidate for citations in AI Overviews.
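To make the chunking idea concrete, here is a deliberately toy sketch. It is emphatically not how Google retrieves or cites sources (that process isn't public); it just splits a page into subheading-led sections and scores each one against a sub-question by plain word overlap. The sample page text and the helper names `chunk_by_heading` and `best_section` are hypothetical. The point it illustrates: when each sub-question has its own clearly signposted section, even a crude retrieval step has an obvious passage to point at.

```python
import re
from collections import Counter

# Toy illustration only: NOT Google's retrieval or citation process.
# The page text and helper names below are hypothetical.

PAGE = """\
## Common causes of toothache after an extraction
Dry socket and infection are common causes of pain after an extraction.

## How to manage the pain
Over-the-counter painkillers and cold compresses can help manage the pain.

## When to seek medical advice
Seek advice from your dentist if pain or swelling gets worse after a few days.
"""


def chunk_by_heading(text: str) -> dict[str, str]:
    """Split markdown-style text into {subheading: section body} chunks."""
    sections: dict[str, str] = {}
    heading = None
    for line in text.splitlines():
        if line.startswith("## "):
            heading = line[3:].strip()
            sections[heading] = ""
        elif heading is not None and line.strip():
            sections[heading] += line.strip() + " "
    return {h: body.strip() for h, body in sections.items()}


def words(text: str) -> Counter:
    """Lower-cased word counts, ignoring punctuation."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def best_section(question: str, sections: dict[str, str]) -> str:
    """Return the subheading whose heading plus body shares the most words with the question."""
    q = words(question)

    def score(heading: str) -> int:
        overlap = q & words(heading + " " + sections[heading])  # multiset intersection
        return sum(overlap.values())

    return max(sections, key=score)


sections = chunk_by_heading(PAGE)
print(best_section("when should I see a dentist about pain", sections))
```

Here the question matches "When to seek medical advice" far better than the other two sections. Real systems use semantic embeddings rather than word overlap, but the benefit of one focused section per sub-question carries over.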

If this content chunking/segmentation tactic sounds familiar, you’re right. It’s been a staple of SEO for many years. Content segmentation with distinct topical focus per segment is also a key tactic when optimising for featured snippets and passage indexing.

ChatGPT et al

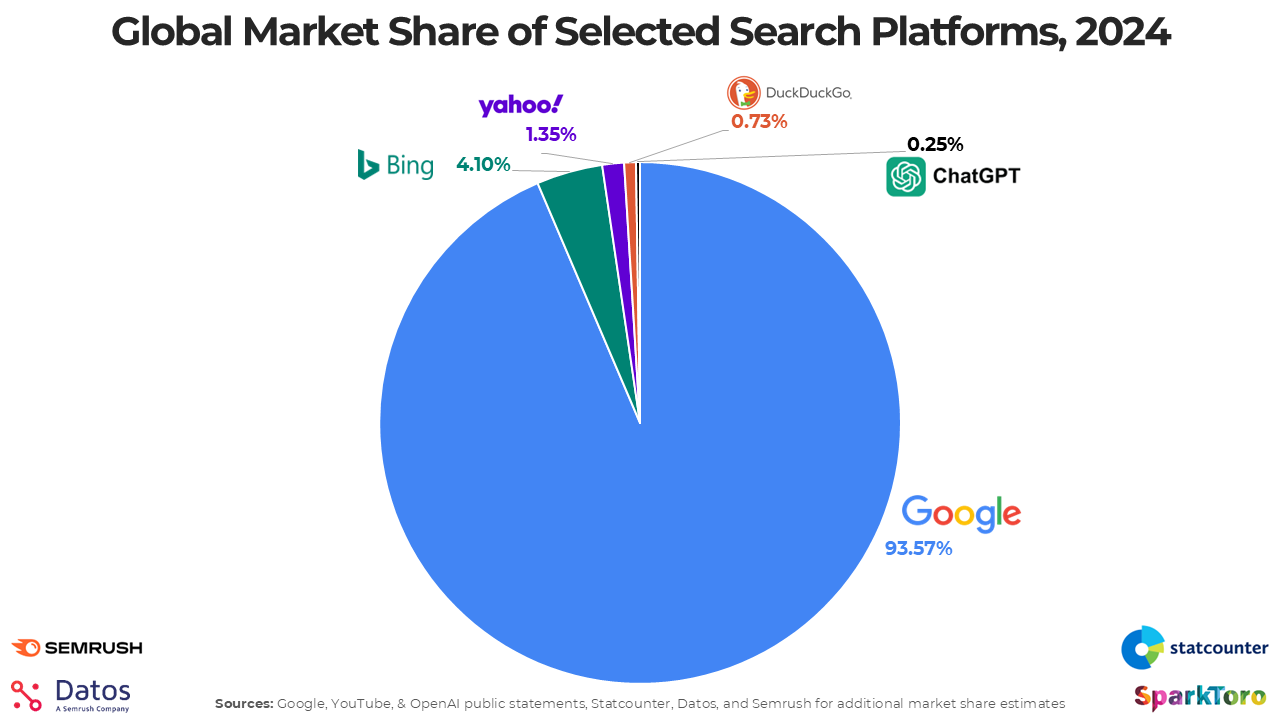

Google is not the only AI player on the market, though arguably they’re the biggest by virtue of their global search dominance. Nonetheless, some industry watchers predict ChatGPT or another AI player like Perplexity might become a threat to Google.

So far, we’re not seeing a radical shift in user behaviour. Yes, ChatGPT is growing, but not at a rate that would alarm Google. Plus, most ChatGPT queries aren’t replacing classic Google searches.

The data published so far suggests that only around 30% of prompts on ChatGPT would be ‘search equivalent’, i.e. a query that the user would have previously relied on Google for.

If we take OpenAI’s own numbers as gospel, with 1 billion prompts per day, we end up with 300 million daily search-equivalent prompts on ChatGPT. That sounds like a lot, until you realise that Google handles 14 billion searches per day. And that number is also growing.

Independent traffic analysis sources are less optimistic about ChatGPT’s numbers, with some data suggesting that this AI platform handles only around 37.5 million daily search-equivalent prompts. That’s a 2024 number, so likely to be higher now, but still way off OpenAI’s own numbers. ChatGPT hasn’t 10xed in the last six months.
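The arithmetic in the last two paragraphs can be checked in a few lines. This sketch takes the quoted figures at face value: OpenAI’s 1 billion daily prompts, the roughly 30% search-equivalent share, Google’s 14 billion daily searches, and the independent 2024 estimate of 37.5 million. None of these inputs are independently verified here.

```python
# Back-of-the-envelope comparison using the figures quoted above (taken at face value).

CHATGPT_PROMPTS_PER_DAY = 1_000_000_000  # OpenAI's own figure
SEARCH_EQUIVALENT_PCT = 30               # share of prompts that replace a Google search
GOOGLE_SEARCHES_PER_DAY = 14_000_000_000
INDEPENDENT_ESTIMATE = 37_500_000        # independent 2024 estimate of search-equivalent prompts

# Integer arithmetic keeps the headline number exact.
openai_based = CHATGPT_PROMPTS_PER_DAY * SEARCH_EQUIVALENT_PCT // 100

share_openai = openai_based / GOOGLE_SEARCHES_PER_DAY
share_independent = INDEPENDENT_ESTIMATE / GOOGLE_SEARCHES_PER_DAY
gap = openai_based / INDEPENDENT_ESTIMATE

print(f"Search-equivalent prompts (OpenAI-based): {openai_based:,}")
print(f"As a share of Google searches: {share_openai:.1%}")
print(f"Independent estimate as a share: {share_independent:.2%}")
print(f"OpenAI-based figure vs independent estimate: {gap:.0f}x")
```

Even on OpenAI’s own numbers, search-equivalent ChatGPT usage is about 2.1% of Google’s daily search volume; on the independent estimate it is around 0.27%, an 8x gap between the two.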

A New SEO?

Optimising for ChatGPT or any of the other LLM-based chatbots aligns with optimising for Google’s AI Overviews. This is unsurprising, as they’re all founded on the same technology. The biggest difference is that ChatGPT relies on Bing search for its RAG/grounding process.

Classic SEO has three main pillars:

Technical SEO is optimising for crawling and indexing

On-page SEO is optimising for relevancy and usefulness

Off-page SEO is optimising for authority and ranking

In the new LLM paradigm, these very same principles apply:

Technical: We need to ensure LLMs can crawl our sites and extract our content.

On-page: Content needs to be useful, accurate, and relevant to users’ prompts.

Off-page: To be a cited source, your brand needs to be a frequently mentioned authority on the topic.

While there are some differences in emphasis, the same principles apply. For example, in classic SEO links are a primary source of authority, whereas in LLMs an unlinked brand mention ticks that box. But unlinked brand mentions have always had SEO value!

LLMs are another search surface to optimise for. Just like maps, news, images, videos, etc., LLMs are another arrow in the SEO industry’s quiver.

It’s why I am so resistant to any attempts at rebranding. GEO, AEO, LLMO, AIEIO, whatever you want to call it - it’s still just SEO.

I would challenge any GEO/AEO/WTFEO advocate to name me one foundational strategy that only applies to optimising for LLMs and has no relation to classic SEO in any way. If you know of such a strategy, let me know in the comments and there’s a chance you’ll have me eat my words.

AI Mode

While AI Overviews are painful but not catastrophic for websites’ traffic from Google search, there is a bigger threat on the horizon: AI Mode.

Currently in beta for users in the USA, Google’s AI Mode replaces the classic search results with a completely AI-generated page of answers.

Similar to AI Overviews, the AI Mode will cite sources and even link directly to webpages from within the generated answers. But, as we’ve seen with AIOs, users are much less likely to click on these links compared to regular search results.

Moreover, AI Mode doesn’t appear to exclude news. I’ve seen screenshots where a user asked Google’s AI Mode to give the latest news on a topic, and it generated a summary. Yes, there were links to cited news articles, but these simply do not receive anywhere near the same level of clicks as a Top Stories box.

While it’s in beta at the moment, I fully expect AI Mode to become a core Google feature in the near future. It may become an alternative tab for users to click on, as is currently the case with News, Images, Videos, etc.

But the popularity of AI generated answers could mean Google chooses AI Mode as the default search result. The classic ‘ten blue links’ could then be relegated to one of the alternative tabs at the top of the page, like the ‘Web’ tab.

If that happens, Google will have truly broken its social contract with the web. Because AI-generated answers send radically less traffic, a search engine that provides such answers by default will not be a strong source of traffic for the webpages that supply those answers.

The impact on the free and open web, where most websites depend on traffic to survive, and Google serves as the monopolistic gatekeeper of most traffic, would be radical.

It would also, beyond a shadow of a doubt, make Google a publisher. As a publisher, Google would have to take responsibility for the content produced by its AI.

Which brings me to…

Hallucinations

Since the introduction of SGE, then AIO, and now AI Mode, people have been trying to poke holes in the answers Google’s AI provides. This has proven to be frighteningly easy.

AI Overviews are prone to what the AI industry calls ‘hallucinations’. Basically, this is when the AI makes something up that’s not actually true. I think calling that a ‘hallucination’ is too kind a phrase.

You could call it ‘lying’ instead, but that would imply that the AI knows it’s being inaccurate. But these AIs don’t know anything - they’re LLMs, probabilistic word predictors with limited reasoning capabilities - so you can’t call it lying either.

Bullshitting is probably the most accurate term to use. The AI just makes stuff up and confidently presents it to the user, without an ounce of awareness of its accuracy.

With the best effort in the world, AI companies cannot prevent their machines from spewing forth bullshit. In fact, with every new iteration of their LLMs the likelihood of bullshitting appears to increase.

Social media is rife with examples of extremely wrong answers generated by Google’s AI Overviews. Lily Ray regularly shares examples, some of which are actively dangerous if the user were to uncritically accept that answer.

Some of the more informed tech media regularly point out the problems with bullshitting in AI, especially in AI Overviews. But I don’t think enough emphasis is being placed on this rather foundational flaw in AI technology.

Truth vs Convenience

At the 2025 WAN-IFRA World News Media Congress earlier this week, AI was a common theme for many sessions across the event’s three days. Yet there was precious little mention of hallucinations inherent in LLMs. The media seemed more obsessed with how AI could help them rather than how AI would hurt them.

More importantly, I don’t feel the media as a whole takes the existential threat of AI seriously. And by ‘existential threat’ I don’t mean those dumb predictions about sentient machines causing an apocalypse.

The real threat is how AI is replacing truth with convenience.

AI-generated content is often wrong. It’s inherent in the LLM technology underpinning all the current AI chatbots. Bullshitting is in their very nature.

A knowledgeable, prepared user can mitigate this problem by verifying the AI-generated output. But, as we see with the decreased CTR from AI Overviews, many users won’t verify. They’ll take the generated answer at face value.

The convenience of an instant answer has started to replace the difficulty of finding out what’s actually true. That is something that journalists especially should care very deeply about. At its core, journalism is about truth.

Why are journalists so unfazed by technologies that are actively destroying truth? In politics, newspapers are often front and centre in the fight against authoritarianism and repressive regimes. Yet when faced with a repressive technology like AI, the default reaction seems to be passive acceptance.

Isn’t it the media’s role to expose truth to the public? If so, you should be having front-page spreads about the dangers of AI-generated bullshit (call them ‘hallucinations’ if you want to avoid profanity) and educate your readers on the risks inherent in depending on AI for any factual information.

Media has always helped shape society. Here is an opportunity to help shape how the world adopts AI, and where AI is actually appropriate and useful - and, more importantly, where it’s not. Yet, with few exceptions, most of the non-tech media is eerily silent, just quietly letting this informational apocalypse happen.

I would strongly urge you to change your passive acceptance of AI into active engagement with its dangers. And soon, before truth has been irrevocably replaced by convenience.

Miscellanea

Official Google Docs:

Interesting Articles:

Most AI spending driven by FOMO, not ROI, CEOs tell IBM - The Register

Generative AI is not replacing jobs or hurting wages at all, economists claim - The Register

How to Check Whether AI Assistants Are Distorting Your News Stories - Generative AI in the Newsroom

International Journalism Festival 2025: what we learnt in Perugia about the future of news - Reuters Institute

Five takeaways from the 2025 International Journalism Festival in Perugia - Thomson Reuters Foundation

OpenAI content boss on Google ‘ten blue links’ and arrival of ChatGPT search - Press Gazette

Trapping misbehaving bots in an AI Labyrinth - Cloudflare

Bot farms invade social media to hijack popular sentiment - Fast Company

Latest in SEO:

5 Questions about Backlinks - WTF is SEO?

New Study: The 130 Day Indexing Rule - Indexing Insight

Why updates to Google's Page Quality Rater Guidelines matter for Publishers - Leadership in SEO

5 AI Insights from Google Search Central Live in April 2025 - Aleyda Solis

As always, thanks for reading and subscribing. See you at the next one!